Containerization has changed the way software applications are developed, deployed, and managed, and Docker, the platform most closely associated with it, has become almost synonymous with the approach. In this guide, we explain the concept of containerization and explore the capabilities of Docker.

What is Containerization?

Containerization is a method of packaging, distributing, and running applications in lightweight, self-contained environments called containers. Unlike traditional virtual machines, which each run a full guest operating system, containers share the host operating system’s kernel, which makes them lighter and more efficient. Each container encapsulates the application code, runtime, libraries, and dependencies, ensuring consistency and portability across different environments. In short, virtualization lets a single physical machine run multiple operating systems, while containerization isolates applications that share a single operating system kernel, whether that kernel runs on bare metal or inside a virtual machine. If you want to learn more about virtualization, see our other article: What is Virtualization?

Understanding Containers

- containers rely on Linux kernel features: namespaces and chroot (or, in modern runtimes such as runc, the related pivot_root call) for isolation, and cgroups for resource limits

- each container is started from a container image

Container Image

- immutable, static file that contains the executable code needed to run an isolated process

- an image is composed of:

  - system libraries

  - system tools

  - platform settings

  - the program to run on a containerization platform, such as Docker

- an image is built from filesystem layers stacked on a parent (base) image, so you do not have to create everything from scratch
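To make "layers stacked on a base image" concrete, here is a toy model of how a container's file view could be assembled. It is a sketch under stated assumptions, not how Docker stores data: each layer is modelled as a Python dict of path to file contents, and a value of None stands for a file the layer deletes (a "whiteout"). Real images store layers as tar archives, and Docker's overlay2 storage driver merges them in the kernel. The function name `merge_layers` is hypothetical.

```python
# Toy model of an image filesystem assembled from layers.
# Assumption: each layer is a dict of path -> file contents, and None marks a
# file that the layer deletes (a "whiteout"). Real images store layers as tar
# archives, and Docker's overlay2 driver merges them in the kernel.
from typing import Dict, List, Optional

Layer = Dict[str, Optional[str]]


def merge_layers(layers: List[Layer]) -> Dict[str, str]:
    """Return the file view a container sees; layers are listed base first."""
    view: Dict[str, str] = {}
    for layer in layers:  # apply from the base image upward
        for path, content in layer.items():
            if content is None:
                view.pop(path, None)  # whiteout hides the file from lower layers
            else:
                view[path] = content  # an upper layer shadows a lower one
    return view


if __name__ == "__main__":
    base = {"/etc/os-release": "Ubuntu 20.04", "/tmp/setup.log": "old log"}
    deps = {"/usr/bin/python3": "<python binary>", "/tmp/setup.log": None}
    app = {"/app/app.py": "print('hello')"}
    for path, content in sorted(merge_layers([base, deps, app]).items()):
        print(f"{path}: {content}")
```

The lower layers are never modified: deleting or overwriting a file only changes what the upper layer shows. That is why many images can share one base layer, and why a running container gets its own thin writable layer on top rather than editing the image.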

namespace

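- Linux namespaces partition kernel resources (process IDs, network interfaces, mount points, hostnames, and more) so that each group of processes gets its own isolated view of them; PID namespaces, for example, give each container its own hierarchy of processes

- a process inside one PID namespace cannot see or signal processes in sibling or parent namespaces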
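On Linux you can see which namespaces a process belongs to by reading the symbolic links in /proc/<pid>/ns; each link target looks like "pid:[4026531836]", and processes in the same namespace show the same number. The sketch below, assuming a Linux host with /proc mounted, lists those identifiers for the current process. The helper names `parse_ns_link` and `namespaces_of` are hypothetical.

```python
# Linux only: list the namespaces the current process belongs to.
# Each entry in /proc/<pid>/ns is a symlink whose target looks like
# "pid:[4026531836]"; processes in the same namespace show the same number.
import os
import re
from typing import Dict, Optional, Tuple

_NS_LINK = re.compile(r"^(\w+):\[(\d+)\]$")


def parse_ns_link(target: str) -> Optional[Tuple[str, int]]:
    """Split a link target such as 'net:[4026531840]' into ('net', 4026531840)."""
    match = _NS_LINK.match(target)
    if match is None:
        return None
    return match.group(1), int(match.group(2))


def namespaces_of(pid: str = "self") -> Dict[str, int]:
    """Map each /proc/<pid>/ns entry name to its namespace identifier."""
    ns_dir = f"/proc/{pid}/ns"
    result: Dict[str, int] = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            target = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # e.g. no permission to inspect another user's process
        parsed = parse_ns_link(target)
        if parsed is not None:
            result[name] = parsed[1]
    return result


if __name__ == "__main__":
    for name, ns_id in namespaces_of().items():
        print(f"{name:20} {ns_id}")
```

Running the script once on the host and once inside a container should show different identifiers for the namespaces the container runtime created for it (typically pid, net, mnt, uts, and ipc), while namespaces it did not create keep the host's values.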

cgroup (control group)

- Linux kernel feature (since version 2.6.24)

- limits, accounts for, and isolates the resource usage (CPU, memory, disk I/O) of a collection of processes

- processes are organized into hierarchical groups, each bounded by some criteria and subject to a set of limits
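A process can find its own cgroup in /proc/self/cgroup, where each line has the form "hierarchy-ID:controller-list:cgroup-path"; on a cgroup v2 host there is a single line starting with "0::". The sketch below parses that file and, assuming the cgroup v2 filesystem is mounted at /sys/fs/cgroup, reads the group's memory.max limit. The names `CgroupEntry`, `parse_proc_cgroup`, and `memory_limit_v2` are hypothetical helpers.

```python
# Inspect which cgroup the current process belongs to and, on a cgroup v2 host,
# read its memory limit. Linux only; assumes cgroup v2 is mounted at /sys/fs/cgroup.
from pathlib import Path
from typing import List, NamedTuple, Optional


class CgroupEntry(NamedTuple):
    hierarchy_id: int       # 0 for the unified cgroup v2 hierarchy
    controllers: List[str]  # empty for cgroup v2
    path: str               # path relative to the cgroup filesystem root


def parse_proc_cgroup(text: str) -> List[CgroupEntry]:
    """Parse /proc/<pid>/cgroup lines of the form 'id:controllers:path'."""
    entries = []
    for line in text.splitlines():
        if not line.strip():
            continue
        hierarchy_id, controllers, path = line.split(":", 2)
        entries.append(CgroupEntry(int(hierarchy_id),
                                   [c for c in controllers.split(",") if c],
                                   path))
    return entries


def memory_limit_v2(entries: List[CgroupEntry],
                    root: str = "/sys/fs/cgroup") -> Optional[int]:
    """Return memory.max in bytes, or None if unlimited or not readable."""
    for entry in entries:
        if entry.hierarchy_id == 0 and not entry.controllers:
            limit_file = Path(root) / entry.path.lstrip("/") / "memory.max"
            try:
                value = limit_file.read_text().strip()
            except OSError:
                return None
            return None if value == "max" else int(value)
    return None


if __name__ == "__main__":
    entries = parse_proc_cgroup(Path("/proc/self/cgroup").read_text())
    print(entries)
    print("memory limit:", memory_limit_v2(entries))
```

Inside a container started with docker run --memory=512m on a cgroup v2 host, the script should report a limit of 536870912 bytes, because Docker enforces that flag through the container's cgroup; outside any limit it reports None.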

chroot

- operation in Unix that changes the apparent root directory for the running process and its children

- the process cannot access files outside the designated directory tree (this environment is called a chroot jail)
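The real system call is available in Python as os.chroot, but it needs root privileges (CAP_SYS_CHROOT), and chroot alone is not a complete security boundary against a privileged process. The sketch below therefore only models the path-resolution rule of a chroot jail: every path the jailed process uses is interpreted relative to the jail root, and ".." can never climb above it. Assumptions: `resolve_in_jail` is a hypothetical helper, the jail root and working directory are absolute paths, and symlinks are ignored.

```python
# Pure path model of a chroot jail; it never calls chroot itself.
# Assumptions: the jail root is an absolute host path, the working directory is
# an absolute path as seen inside the jail, and symlinks are ignored.
import posixpath


def resolve_in_jail(jail_root: str, path: str, cwd: str = "/") -> str:
    """Map a path as seen by a jailed process to the real host path."""
    apparent = posixpath.join(cwd, path)  # relative paths start from cwd
    # normpath collapses "." and ".."; a ".." at "/" stays at "/", much as the
    # kernel makes ".." at a chroot's root refer to the root itself.
    apparent = posixpath.normpath(apparent)
    relative = apparent.lstrip("/")
    return posixpath.join(jail_root, relative) if relative else jail_root


if __name__ == "__main__":
    print(resolve_in_jail("/srv/jail", "/etc/passwd"))
    print(resolve_in_jail("/srv/jail", "../../etc/shadow", cwd="/home"))
```

However many ".." components the process uses, the result stays under /srv/jail, which is the property the chroot jail relies on.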

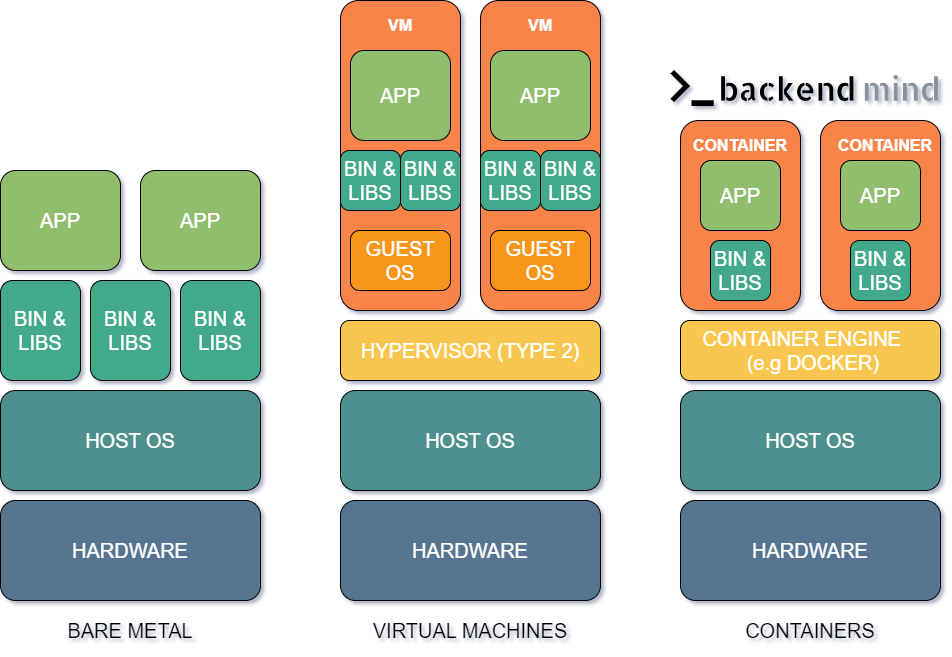

Bare Metal vs VM vs Containers

In the world of deploying applications, there are three main methods: bare metal, virtual machines (VMs), and containers. Each has its own strengths and weaknesses, so let’s break them down in simple terms.

Bare Metal Deployment: Bare metal deployment means running your software directly on physical servers without any abstraction layers.

Pros:

- Performance: Since there are no intermediary layers, bare metal offers maximum performance for your applications.

- Control: You have full control over the hardware, allowing you to optimize it according to your needs.

- Isolation: Your application has dedicated resources without interference from other virtual environments.

Cons:

- Scalability: Scaling can be challenging and time-consuming because you need to procure and set up new hardware.

- Resource Utilization: Hardware resources may not be utilized efficiently if your application doesn’t require all the available capacity.

Virtual Machines (VMs): Virtual machines emulate physical computers in software; a hypervisor lets several of them run on a single host machine. Each VM operates independently, with its own operating system and resources.

Pros:

- Isolation: VMs provide strong isolation, ensuring that applications running on different VMs don’t interfere with each other.

- Scalability: Scaling is more flexible compared to bare metal since you can provision new VMs relatively quickly.

- Resource Management: VMs allow you to allocate specific resources to each application, optimizing resource utilization.

Cons:

- Overhead: Running multiple VMs on a single physical server incurs overhead, both from the hypervisor layer and from each VM running its own full guest operating system.

- Performance: VMs may not offer the same level of performance as bare metal because of the virtualization layer.

Containers: Containers are lightweight, portable, and self-sufficient units that package the application and its dependencies.

Pros:

- Portability: Containers can run on any platform that supports containerization, making them highly portable.

- Resource Efficiency: Containers share the host OS kernel, leading to efficient resource utilization and minimal overhead.

- Rapid Deployment: Containers can be deployed and scaled up or down rapidly, facilitating agile development and deployment practices.

Cons:

- Less Isolation: Because containers share the host kernel, their isolation is generally weaker than that of VMs, which can lead to security risks if they are not properly configured.

- Complex Networking: Networking between containers and external systems can be complex and requires careful configuration.

In conclusion, the choice between bare metal, virtual machines, and containers depends on your specific requirements. If you prioritize performance and control, bare metal might be the way to go. For flexibility and scalability, virtual machines offer a good balance. And if you value portability and rapid deployment, containers could be the ideal solution for your needs.

Introducing Docker

Docker is a leading platform for containerization, providing developers and IT professionals with tools to build, deploy, and manage containers. At the heart of Docker is the Docker Engine, a lightweight runtime environment that enables the creation and execution of containers. Docker also offers a comprehensive ecosystem of tools and services, including Docker Compose for orchestrating multi-container applications, Docker Swarm for container clustering and orchestration, and Docker Hub for sharing and discovering container images.

Key Features of Docker

- Portability: Docker containers can run on any system that supports the Docker Engine, providing a consistent environment from development to production.

- Isolation: Containers are isolated from one another and the underlying host system, ensuring that changes or failures in one container do not affect others.

- Efficiency: Docker containers are lightweight and start quickly, allowing for rapid deployment and scaling of applications.

- Flexibility: Docker’s modular architecture and extensive library of pre-built images enable developers to assemble complex applications from reusable components easily.

Benefits of Docker and Containerization

- Simplified Deployment: Docker streamlines the deployment process, enabling developers to package applications and their dependencies into portable containers that can be deployed across different environments seamlessly.

- Improved Scalability: With Docker, applications can be scaled up or down dynamically by adding or removing container instances, ensuring optimal resource utilization and performance.

- Enhanced Collaboration: Docker facilitates collaboration among development teams by providing a consistent development environment that can be easily shared and reproduced.

- Increased Efficiency: By encapsulating applications and their dependencies, Docker reduces conflicts and compatibility issues, leading to faster development cycles and higher productivity.

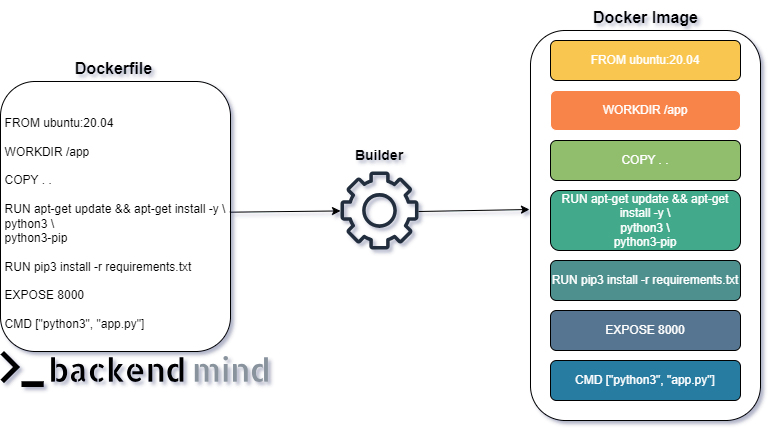

Dockerfile

A Dockerfile is a text file that contains instructions for building a Docker image. It specifies the environment and configuration needed to run an application inside a Docker container. Dockerfiles are used as input by the docker build command to create Docker images automatically.

Example of such a file:

```dockerfile
# Use an existing base image
FROM ubuntu:20.04

# Set the working directory inside the container
WORKDIR /app

# Copy files from the host machine to the container filesystem
COPY . .

# Install dependencies
RUN apt-get update && apt-get install -y \
    python3 \
    python3-pip

# Install Python dependencies
RUN pip3 install -r requirements.txt

# Expose a port for communication
EXPOSE 8000

# Define the command to run the application when the container starts
CMD ["python3", "app.py"]
```

Explanation of each line:

FROM ubuntu:20.04: Specifies the base image to use for building this Docker image. In this case, it’s Ubuntu 20.04.

WORKDIR /app: Sets the working directory inside the container to /app, creating it if it does not exist. All subsequent instructions will be executed from this directory.

COPY . .: Copies all files from the build context (the directory passed to docker build, usually the one containing the Dockerfile) on the host machine to the /app directory inside the container.

RUN apt-get update && apt-get install -y python3 python3-pip: Updates the package list inside the container and installs Python 3 and the pip3 package manager. The -y flag answers "yes" to installation prompts so the build does not stall.

RUN pip3 install -r requirements.txt: Uses pip3 to install the Python dependencies listed in a requirements.txt file. This assumes that a requirements.txt file exists in the build context, so that the earlier COPY . . step has already placed it in /app.

EXPOSE 8000: Documents that the container listens on port 8000 at runtime. It does not actually publish the port; to make it reachable from the host, publish it with the -p flag of docker run (for example, docker run -p 8000:8000 myapp).

CMD ["python3", "app.py"]: Specifies the default command to run when the container starts. In this case, it runs the Python script app.py. The command can be overridden by passing a different command after the image name in docker run.

This Dockerfile is a basic example of how to define a Docker image for a Python application. It installs dependencies, sets up the working directory, exposes a port, and specifies the command to run the application.
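The Dockerfile runs an app.py that the article does not show. Below is a hypothetical, standard-library-only app.py that would fit it: it serves HTTP on port 8000 to match EXPOSE 8000. The handler name, the response text, and the `make_server` helper are illustrative assumptions; because only the standard library is used, requirements.txt could be left empty for this sketch.

```python
# app.py: hypothetical minimal application for the Dockerfile above.
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = b"Hello from inside the container\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int = 8000) -> HTTPServer:
    # Bind to 0.0.0.0 rather than 127.0.0.1: inside a container the loopback
    # interface is private to the container, so a published port could not
    # reach a server that listens only on loopback.
    return HTTPServer(("0.0.0.0", port), HelloHandler)


if __name__ == "__main__":
    make_server().serve_forever()
```

After building the image with docker build -t myapp ., running docker run -p 8000:8000 myapp should make the server answer at http://localhost:8000 on the host.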

Docker Image layers

A Docker build consists of a series of ordered build instructions. Instructions that modify the filesystem (RUN, COPY, and ADD) each create a new image layer, while others, such as ENV, EXPOSE, or CMD, only record metadata. Layers play a crucial role in containerization, offering a streamlined way to package and deploy applications. They are like building blocks, each containing the set of file changes produced by one instruction. One of the key benefits of layers is their reusability. Layers are content-addressed, so images that share a layer (for example, the same base image) store it only once on disk, and the build cache lets Docker reuse unchanged layers from earlier builds instead of rebuilding them. This saves time and disk space and makes deployments faster.

The order of instructions in a Dockerfile matters for two reasons. First, layers are stacked on top of each other, with each layer depending on the layers beneath it, so changing the order can affect dependencies and configuration and lead to errors or inconsistencies. Second, the order affects the build cache. For each instruction, Docker checks whether it can reuse a cached layer: for most instructions it compares the instruction text, and for COPY and ADD it also compares checksums of the copied files. Once one step misses the cache, every subsequent step is rebuilt as well. Placing instructions that change rarely (such as installing system packages) before instructions that change often (such as copying application source code) therefore keeps more of the cache valid and shortens build times.
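The cache-invalidation rule can be simulated in a few lines. This is a simplified model, not Docker's implementation: each build step gets a key that hashes its parent's key, the instruction text, and (for COPY) a stand-in digest of the copied files, so a change in one step changes the keys of every step after it. The function names, the digest strings, and the reordered variant of the example Dockerfile are all hypothetical; EXPOSE is omitted for brevity.

```python
# Simplified model of Docker's build cache: each step's key chains the parent
# key, the instruction text, and a digest of copied files (COPY only).
import hashlib
from typing import List, Tuple

Step = Tuple[str, str]  # (instruction, content digest or "")


def layer_keys(steps: List[Step]) -> List[str]:
    """Compute a chained cache key for every build step."""
    keys = []
    parent = ""
    for instruction, content_digest in steps:
        key = hashlib.sha256(
            f"{parent}|{instruction}|{content_digest}".encode()
        ).hexdigest()
        keys.append(key)
        parent = key  # every step's key depends on the step beneath it
    return keys


def rebuilt_steps(old: List[Step], new: List[Step]) -> List[str]:
    """Instructions in `new` that miss a cache filled by building `old`."""
    cached = set(layer_keys(old))
    return [instr for (instr, _), key in zip(new, layer_keys(new))
            if key not in cached]


def dockerfile_steps(source_digest: str) -> List[Step]:
    # Instruction order of the example Dockerfile; the digest stands for the
    # whole build context, because COPY . . copies everything.
    return [
        ("FROM ubuntu:20.04", ""),
        ("WORKDIR /app", ""),
        ("COPY . .", source_digest),
        ("RUN apt-get update && apt-get install -y python3 python3-pip", ""),
        ("RUN pip3 install -r requirements.txt", ""),
        ('CMD ["python3", "app.py"]', ""),
    ]


def reordered_steps(source_digest: str, requirements_digest: str = "req-v1") -> List[Step]:
    # Hypothetical reordering: install dependencies before copying the source.
    return [
        ("FROM ubuntu:20.04", ""),
        ("WORKDIR /app", ""),
        ("RUN apt-get update && apt-get install -y python3 python3-pip", ""),
        ("COPY requirements.txt .", requirements_digest),
        ("RUN pip3 install -r requirements.txt", ""),
        ("COPY . .", source_digest),
        ('CMD ["python3", "app.py"]', ""),
    ]


if __name__ == "__main__":
    # Simulate editing app.py: the digest of the copied source changes.
    print("original order:", rebuilt_steps(dockerfile_steps("v1"), dockerfile_steps("v2")))
    print("reordered:", rebuilt_steps(reordered_steps("v1"), reordered_steps("v2")))
```

With the original order, a source-only change forces the apt-get and pip3 steps to run again; in the reordered variant only COPY . . and the steps after it miss the cache, so the dependency layers are reused.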

The most important Docker commands

- docker run: This command is used to create and start a new container from a Docker image. Example:

  docker run -d -p 8080:80 nginx

  This command runs a new container in detached mode (-d) based on the NGINX image, mapping port 8080 on the host to port 80 in the container.

- docker ps: Lists running containers along with their IDs, names, status, and other details. Add -a to include stopped containers as well.

- docker stop: Stops one or more running containers. Example:

  docker stop CONTAINER_ID

  This command stops the container with the specified ID.

- docker rm: Removes one or more containers. Example:

  docker rm CONTAINER_ID

  This command removes the container with the specified ID.

- docker images: Lists all locally stored Docker images along with their repository, tag, and size.

- docker rmi: Removes one or more Docker images. Example:

  docker rmi IMAGE_ID

  This command removes the Docker image with the specified ID.

- docker pull: Pulls a Docker image from a registry. Example:

  docker pull ubuntu

  This command pulls the ubuntu image with the default latest tag from the Docker Hub registry.

- docker build: Builds a Docker image from a Dockerfile. Example:

  docker build -t myapp .

  This command builds a Docker image tagged as myapp using the Dockerfile in the current directory (.), which also serves as the build context.

- docker exec: Runs a command inside a running container. Example:

  docker exec -it CONTAINER_ID bash

  This command opens an interactive shell (bash) inside the container with the specified ID; -i keeps standard input open and -t allocates a terminal.

- docker-compose up: Starts services defined in a docker-compose.yml file. Example:

  docker-compose up -d

  This command starts all services defined in the docker-compose.yml file in detached mode (-d). Newer Docker releases ship Compose V2 as a plugin, invoked as docker compose up -d (with a space).
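When these commands are scripted, it helps to see how the flags map onto arguments. The sketch below builds the argument list for docker run using only the documented flags -d, -p (host port first, then container port) and --name, plus an optional command that overrides the image's CMD. The helpers `docker_run_args` and `run_container` are hypothetical, not part of Docker; actually running the command assumes the docker CLI and a running Docker daemon.

```python
# Hypothetical helpers for scripting `docker run`; they only build and run a
# command line for the docker CLI.
import shlex
import subprocess
from typing import Dict, List, Optional


def docker_run_args(image: str, *, detach: bool = False,
                    ports: Optional[Dict[int, int]] = None,
                    name: Optional[str] = None,
                    command: Optional[List[str]] = None) -> List[str]:
    """Build argv for `docker run`; ports maps host port -> container port."""
    args = ["docker", "run"]
    if detach:
        args.append("-d")
    if name:
        args += ["--name", name]
    for host_port, container_port in (ports or {}).items():
        args += ["-p", f"{host_port}:{container_port}"]  # host:container
    args.append(image)
    args += command or []  # anything after the image overrides its CMD
    return args


def run_container(args: List[str]) -> str:
    """Run the command; in detached mode docker run prints the container ID."""
    result = subprocess.run(args, check=True, capture_output=True, text=True)
    return result.stdout.strip()


if __name__ == "__main__":
    argv = docker_run_args("nginx", detach=True, ports={8080: 80})
    print(shlex.join(argv))  # docker run -d -p 8080:80 nginx
    # run_container(argv) would start it (requires a running Docker daemon).
```

Passing a list to subprocess.run, rather than one shell string, avoids quoting problems when image names or commands come from variables.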

In conclusion, Docker and containerization change how software is built and shipped: an application is packaged once with its dependencies and runs the same way on a developer's laptop, a test server, or in production, which helps businesses gain agility, scalability, and efficiency in their operations.