HTTP is a stateless protocol used to transfer data between a client and a server. It runs over TCP, which ensures that data is delivered correctly and in order, so many ways to improve HTTP connection performance come down to optimizing the underlying TCP connection.

TCP connection

- a TCP connection is established between the client and the server before any data is sent

- the connection is established using a three-way handshake:

  1. the client sends a SYN packet to the server

  2. the server responds with a SYN-ACK packet

  3. the client sends an ACK packet to the server

- once established, TCP provides reliable and ordered delivery of a stream of bytes.
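The cost of the handshake can be observed from user code, because `connect()` only returns once the handshake has completed on the client side. The sketch below is a minimal illustration, assuming a throwaway listener on localhost; the helper name `timed_connect` is hypothetical. On a real network the elapsed time is roughly one round trip.

```python
import socket
import threading
import time


def timed_connect() -> float:
    """Open a TCP connection to a local listener and time the handshake."""
    # Port 0 asks the OS for any free port.
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))
    listener.listen(1)
    host, port = listener.getsockname()

    def accept_once() -> None:
        conn, _ = listener.accept()
        conn.close()

    acceptor = threading.Thread(target=accept_once)
    acceptor.start()

    start = time.perf_counter()
    # The kernel performs SYN -> SYN-ACK -> ACK before this call returns.
    client = socket.create_connection((host, port), timeout=5)
    elapsed = time.perf_counter() - start

    client.close()
    acceptor.join()
    listener.close()
    return elapsed


if __name__ == "__main__":
    print(f"handshake took {timed_connect() * 1000:.3f} ms")
```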

Techniques to improve HTTP connection performance

- Parallel connections

- Persistent connections

- Connection pooling

- Pipelined connections

- Multiplexed connections.

Parallel connections

- multiple connections are established between the client and the server

- allows downloading multiple (different) resources at the same time.

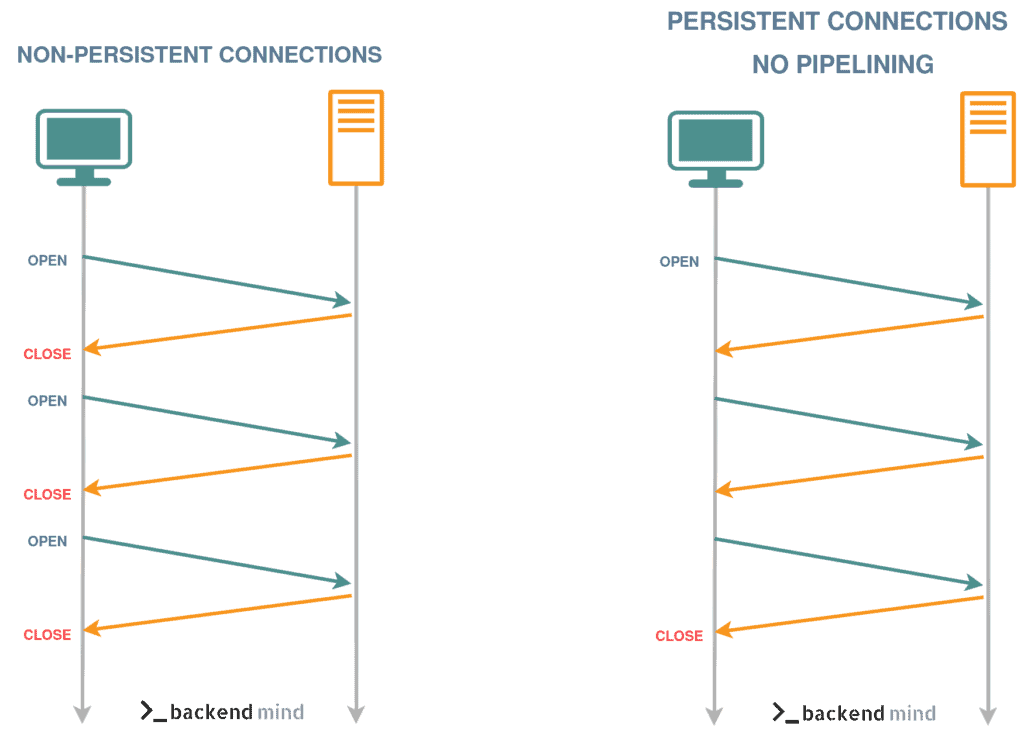
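As a rough sketch of parallel connections, the example below opens one TCP connection per resource and downloads them concurrently. It assumes a small local test server that echoes the request path; `EchoPathHandler` and `fetch_in_parallel` are hypothetical names used only for illustration.

```python
import http.client
import threading
from concurrent.futures import ThreadPoolExecutor
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class EchoPathHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = self.path.encode()
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo quiet


def fetch_in_parallel(paths):
    """Download every path at the same time, each over its own connection."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), EchoPathHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    port = server.server_address[1]

    def fetch(path):
        # A fresh HTTPConnection means a fresh TCP connection (and handshake).
        conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
        try:
            conn.request("GET", path)
            return conn.getresponse().read().decode()
        finally:
            conn.close()

    try:
        with ThreadPoolExecutor(max_workers=len(paths)) as pool:
            # map() returns results in the order of `paths`.
            return list(pool.map(fetch, paths))
    finally:
        server.shutdown()
        server.server_close()
```

Each worker pays for its own handshake, which is the overhead that persistent connections and pooling try to avoid.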

Persistent connections

- connection is kept open after the initial request

- reduces the overhead of establishing a new TCP connection for each request

- reduces resource usage and number of network round trips

- reduces latency for every request after the first, since those requests skip the TCP handshake.

HTTP without persistent connections

- new connection must be established for each request

- TCP buffers need to be allocated

- TCP handshake needs to be performed.

HTTP with keep-alive

- used in HTTP/1.0 to keep the connection open after the response is sent

- maintained using the `Connection: keep-alive` header.

HTTP with persistent connections

- the server leaves the TCP connection open after sending the response

- available from HTTP/1.1 by default (no need for the `Connection: keep-alive` header)

- connection is closed after a timeout or when the client sends a `Connection: close` header.
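Connection reuse can be checked with a small experiment. In this sketch, a local HTTP/1.1 test server replies with the client's source port, which identifies the TCP connection; if several requests report the same port, they shared one connection. `PortEchoHandler` and `ports_seen_by_server` are hypothetical names for this illustration.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class PortEchoHandler(BaseHTTPRequestHandler):
    # HTTP/1.1 makes http.server keep the connection open between requests.
    protocol_version = "HTTP/1.1"

    def do_GET(self):
        # The client's source port identifies the TCP connection in use.
        body = str(self.client_address[1]).encode()
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo quiet


def ports_seen_by_server(request_count):
    """Send several requests over one connection; return the port seen each time."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), PortEchoHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    conn = http.client.HTTPConnection(
        "127.0.0.1", server.server_address[1], timeout=5
    )
    try:
        ports = []
        for _ in range(request_count):
            conn.request("GET", "/")
            # The body must be read fully before the connection can be reused.
            ports.append(int(conn.getresponse().read()))
        return ports
    finally:
        conn.close()
        server.shutdown()
        server.server_close()
```

If the server sent `Connection: close` instead, `http.client` would have to reconnect for the next request and the reported ports would typically differ.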

Non-persistent vs persistent connections

Connection pooling

- technique used to reduce the overhead of establishing new connections

- maintains a pool of open connections that can be reused for multiple requests

- often paired with a pool of concurrent threads, so multiple requests can be handled simultaneously, each over its own pooled connection.

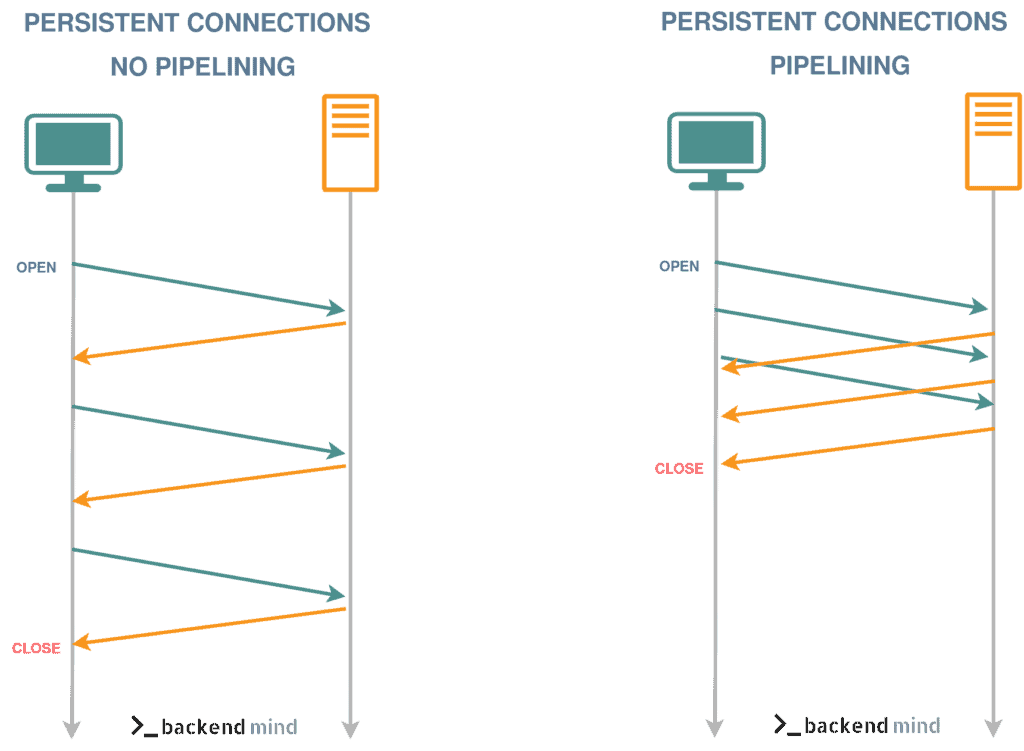
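A connection pool can be sketched in a few lines. The version below is a minimal illustration rather than a production design: it creates a fixed number of connections up front and hands them out through a thread-safe queue. The `ConnectionPool` class and its `factory` parameter are assumptions made for this example; in practice the factory might be something like `lambda: http.client.HTTPConnection(host)`.

```python
import queue
from contextlib import contextmanager


class ConnectionPool:
    """Fixed-size pool that lends out pre-opened connections."""

    def __init__(self, factory, size):
        self._idle = queue.Queue()
        for _ in range(size):
            # Pay the connection setup cost once, up front.
            self._idle.put(factory())

    @contextmanager
    def connection(self, timeout=None):
        # Blocks while every connection is in use by another caller.
        conn = self._idle.get(timeout=timeout)
        try:
            yield conn
        finally:
            # Return the connection so the next request can reuse it.
            self._idle.put(conn)
```

A caller borrows a connection with `with pool.connection() as conn: ...`. A real pool would also need to detect and replace connections that the server has closed, which this sketch leaves out.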

Pipelined connections

- multiple requests are sent without waiting for the response

- happens over a single persistent TCP connection

- introduced in HTTP/1.1

- HTTP responses must be returned in the same order as the requests were received

- introduces the head-of-line blocking problem

- if the server is slow to respond to the first request, all subsequent requests are blocked.
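Head-of-line blocking can be reproduced with a raw socket. The sketch below sends two requests back to back over one connection to a local test server that, by assumption, delays its reply to `/slow`. The fast response only arrives after the slow one. `SlowFirstHandler`, `read_response`, `pipelined_fetch`, and the `SLOW_DELAY` value are all hypothetical and exist only for this illustration.

```python
import socket
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

SLOW_DELAY = 0.2  # seconds; arbitrary value for the demo


class SlowFirstHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"

    def do_GET(self):
        if self.path == "/slow":
            time.sleep(SLOW_DELAY)
        body = self.path.encode()
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo quiet


def read_response(reader):
    """Read one HTTP response from a buffered reader and return its body."""
    reader.readline()  # status line
    length = 0
    while True:
        line = reader.readline()
        if line in (b"\r\n", b""):
            break
        name, _, value = line.partition(b":")
        if name.strip().lower() == b"content-length":
            length = int(value.strip())
    return reader.read(length).decode()


def pipelined_fetch():
    """Return [(body, seconds_until_received), ...] for two pipelined requests."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), SlowFirstHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        with socket.create_connection(server.server_address, timeout=5) as sock:
            start = time.perf_counter()
            # Both requests leave before any response has been read.
            sock.sendall(
                b"GET /slow HTTP/1.1\r\nHost: localhost\r\n\r\n"
                b"GET /fast HTTP/1.1\r\nHost: localhost\r\n"
                b"Connection: close\r\n\r\n"
            )
            results = []
            with sock.makefile("rb") as reader:
                for _ in range(2):
                    body = read_response(reader)
                    results.append((body, time.perf_counter() - start))
            return results
    finally:
        server.shutdown()
        server.server_close()
```

Although `/fast` needs no processing time, its response is stuck behind `/slow`, so both arrive after roughly `SLOW_DELAY` seconds.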

Non-pipelined vs pipelined connections

Multiplexed connections

- multiple requests are sent without waiting for the response

- happens over a single persistent TCP connection

- introduced in HTTP/2

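- responses can be returned in any order, because each request/response pair travels on its own stream with a unique identifier

- removes the HTTP-level head-of-line blocking that occurs with pipelined connections, although a lost TCP packet can still stall every stream on the connection.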
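The idea of tagging data with a stream identifier can be shown with a toy model. This is not real HTTP/2 framing; it is a simplified sketch in which response bodies are cut into chunks, interleaved on one "connection", and reassembled by identifier on the other side. The function names `interleave` and `reassemble` and the frame size are assumptions for illustration.

```python
from collections import defaultdict
from itertools import zip_longest


def interleave(responses, frame_size):
    """Split each response body into frames and emit them round-robin.

    `responses` maps a stream identifier to a full response body (bytes).
    Yields (stream_id, chunk) pairs, mimicking frames sharing one connection.
    """
    chunked = {
        stream_id: [body[i:i + frame_size] for i in range(0, len(body), frame_size)]
        for stream_id, body in responses.items()
    }
    for row in zip_longest(*chunked.values()):
        for stream_id, chunk in zip(chunked, row):
            if chunk is not None:
                yield stream_id, chunk


def reassemble(frames):
    """Rebuild every response body from frames arriving in any stream order."""
    bodies = defaultdict(bytes)
    for stream_id, chunk in frames:
        bodies[stream_id] += chunk
    return dict(bodies)
```

With `responses = {1: b"a long response body", 3: b"short"}` and a frame size of 5, the short response on stream 3 is complete after the second frame, without waiting for stream 1 to finish.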

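In general, the more recent the HTTP version, the better the connection performance, because each revision improves how the protocol uses the connection: HTTP/1.1 made persistent connections the default and added pipelining, and HTTP/2 added multiplexing.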